The discussion surrounding AI search usually revolves around LLMs: the quality of their answers and the platform technology behind them. Google has impressively demonstrated its leadership in both of these areas with Gemini 3, the model now ranked number 1 on the LMArena leaderboards.

One crucial factor is often overlooked: the quality of the back-ends. No matter how powerful an LLM is, without access to current, comprehensive information from the web or other data sources, its responses will be limited. It is in this area that a massive difference between ChatGPT and Google's AI Mode becomes apparent.

Links in responses – what our data shows

We analyzed over 10 million responses from ChatGPT and Google AI Mode stored in our SISTRIX AI databases to understand how both competitors handle web searches.

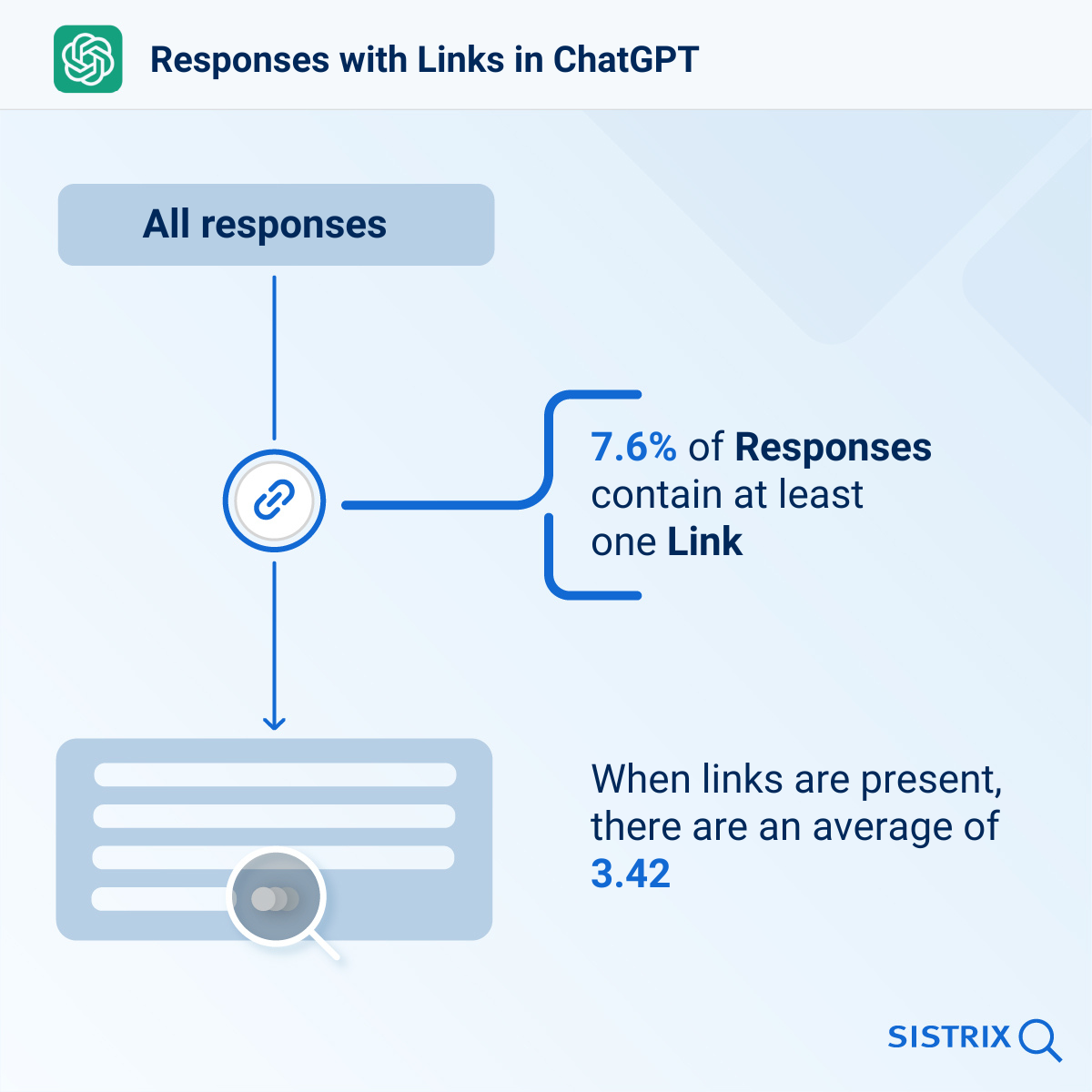

The result: in the publicly available version of ChatGPT, a web search is performed for only 7.6% of prompts. In over 90% of cases, ChatGPT answers solely from its training data. When links are displayed, answers contain an average of 3.42 sources.

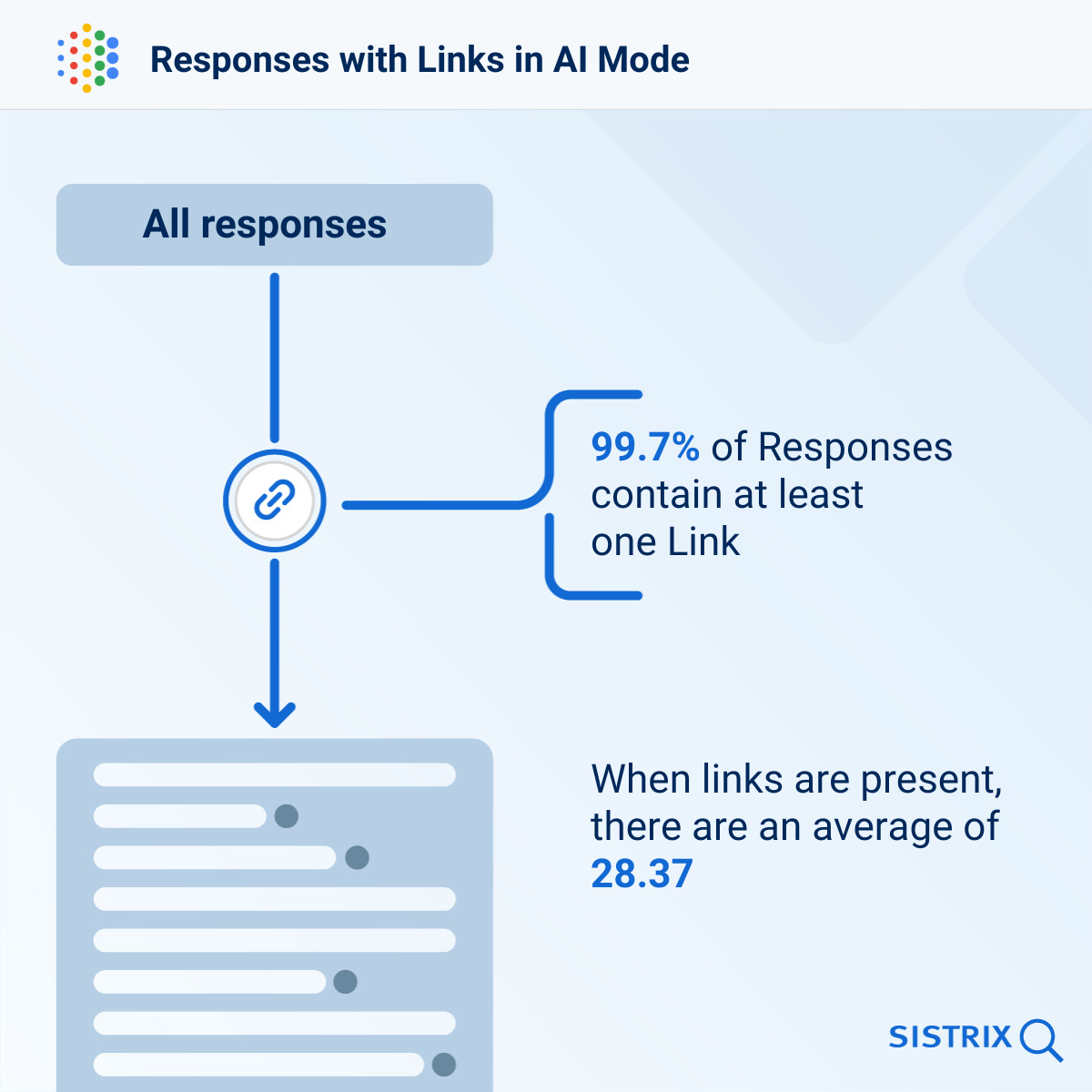

Google AI Mode works fundamentally differently: here, a web search is performed for 99.72% of prompts, and the answers contain an average of 28.37 links, more than eight times as many as ChatGPT.

Why this will become a problem for OpenAI

These figures highlight a structural advantage that Google possesses: over 20 years of experience in building search infrastructure, crawling, and databases. This infrastructure cannot be replicated in just a few years.

For ChatGPT, this means that even with an excellent language model, many answers remain limited to whatever its most recent training dataset contains. Up-to-date information, prices, news, and fact checks all require web search. This is precisely where Google has an advantage that cannot simply be overcome with better algorithms.

My assessment: OpenAI faces a difficult strategic challenge. With ChatGPT, it built the first good language model, but in AI search, it is fighting a competitor that has controlled the field for two decades. Without massive investments in search infrastructure, it will be difficult to catch up.