Large Language Models (LLMs) such as ChatGPT, Gemini, or Claude can generate remarkably fluent text. They are capable of answering questions, summarising content, and even producing entire articles. Yet despite these abilities, there is a key weakness – their responses are not always accurate. LLM answers sound convincing but can contain errors or entirely fabricated information. This is where the concept of grounding comes in.


Grounding is what turns a merely plausible-sounding answer into one that is verifiable and traceable.

What Does Grounding Mean?

The term grounding originally comes from cognitive science. There, it describes the question of how symbols or language are connected to the real world. A word like “apple” is initially just a symbol; it only acquires meaning through its association with an actual object that we can see, smell, or taste.

Applied to language models, grounding means that the model’s outputs are linked to verifiable information. Rather than relying solely on patterns from training data, the response is “anchored” in real data or sources.

Using SISTRIX to analyse sources used in LLMs

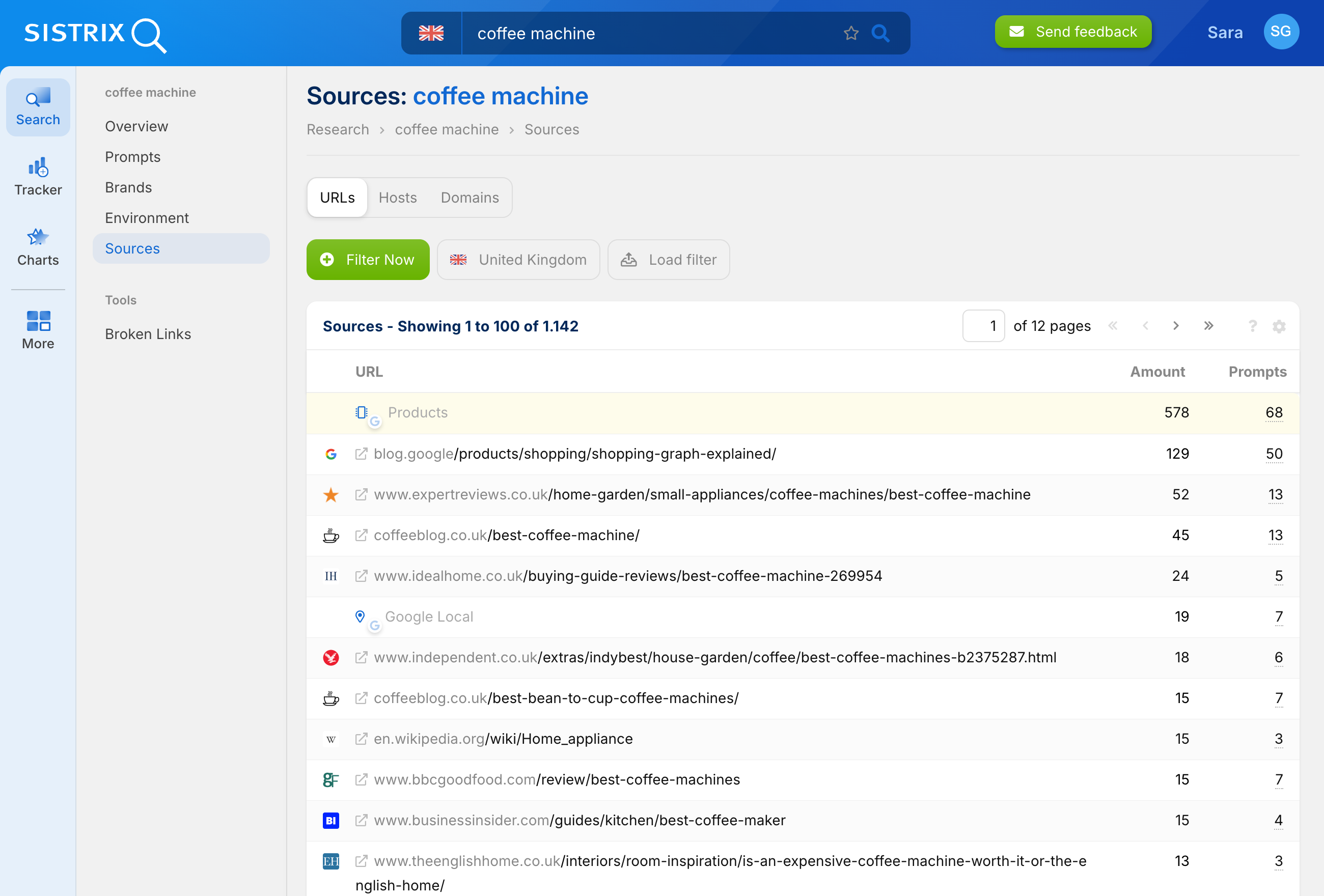

To understand how language models use information, it is not enough to look at the answer alone. What matters is whether sources are cited — and which ones. This is exactly what can be analysed in the SISTRIX AI/Chatbot Beta.

SISTRIX shows which sources are used when searching for an entity, how large each source’s competitive share is, the visibility index of the sources used, and how their distribution develops across the different systems.

For the analysis of grounding, this is an important step. Only when it becomes visible which sources language models actually use can we assess how credible or verifiable an answer is. This allows brands to see whether their own content is being used as a trusted source and which competitors are cited more frequently.
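To make the idea of a source's share concrete, here is a minimal sketch that counts how often each domain is cited across a set of chatbot answers. It is not how SISTRIX calculates its figures; the function name `citation_share` and the example URLs are assumptions made purely for illustration.

```python
from collections import Counter
from urllib.parse import urlparse


def citation_share(cited_urls: list[str]) -> dict[str, float]:
    """Return each domain's share of all citations (0.0 to 1.0)."""
    domains = [urlparse(url).netloc.lower() for url in cited_urls]
    counts = Counter(domains)
    total = sum(counts.values())
    return {domain: count / total for domain, count in counts.items()}


# Hypothetical citations collected from a handful of chatbot answers.
urls = [
    "https://example.com/guide",
    "https://example.com/faq",
    "https://example.org/report",
    "https://example.net/blog",
]
shares = citation_share(urls)

# Print domains from most cited to least cited.
for domain, share in sorted(shares.items(), key=lambda kv: -kv[1]):
    print(f"{domain}: {share:.0%}")
```

Tracked over time and per chatbot, a table like this would show whether your own domain is gaining or losing ground as a cited source.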

Technical background

How LLMs work without grounding

A language model predicts the next word in a text based on probabilities. It has no way of checking whether the content is correct; all that matters is that the output appears linguistically plausible. As a result, an LLM can produce convincingly written but factually incorrect answers. This is also a fundamental difference between AI chatbots and search engines: without grounding, no documents are searched, only probabilities are calculated.
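A toy sketch of that selection step can make this tangible. The probability table is invented for illustration and does not come from any real model; the point is only that nothing in the selection logic refers to a data source.

```python
# Hypothetical next-token probabilities for the prompt
# "The inflation rate is around ..." (numbers invented for illustration).
next_token_probs = {
    "2.5": 0.41,
    "3.0": 0.33,
    "7.9": 0.26,
}


def pick_next_token(probs: dict[str, float]) -> str:
    """Greedy decoding: return the most probable token.

    Nothing here checks the token against a data source;
    only the probabilities matter.
    """
    return max(probs, key=probs.get)


print(pick_next_token(next_token_probs))
```

Real models work with vocabularies of many thousands of tokens and usually sample rather than always taking the top candidate, but the principle is the same: the most likely continuation wins, whether or not it is true.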

Methods of Grounding

There are several approaches to solving this problem:

- Retrieval-Augmented Generation (RAG): Before responding, the model searches external documents, databases, or search systems. This information is then incorporated into text generation.

- API or database integration: The model is connected directly to structured data sources. Example: an LLM retrieves the current exchange rate from a financial database instead of inventing a number.

- Multimodal grounding: Here, language is linked to perceptual data such as images or audio. This is mainly relevant in research and plays only a minor role in SEO applications.
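The retrieval step of RAG can be sketched in a few lines. This is a deliberately simplified illustration, not a production setup: the document store, the word-overlap ranking, and the function names `retrieve` and `build_grounded_prompt` are all assumptions, and the final call to a language model is left out. Real systems typically use a search index or vector database for retrieval.

```python
import re

# Hypothetical mini document store with placeholder texts.
DOCUMENTS = [
    {"source": "doc-a", "text": "Grounding links model outputs to verifiable sources."},
    {"source": "doc-b", "text": "Exchange rates are published daily by central banks."},
    {"source": "doc-c", "text": "Multimodal models combine language with images or audio."},
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: list[dict], top_k: int = 1) -> list[dict]:
    """Rank documents by how many words they share with the question."""
    q_words = tokenize(question)
    scored = [(len(q_words & tokenize(doc["text"])), doc) for doc in documents]
    scored = [item for item in scored if item[0] > 0]  # drop non-matching docs
    scored.sort(key=lambda item: item[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


def build_grounded_prompt(question: str, passages: list[dict]) -> str:
    """Put the retrieved passages in front of the question, with source ids."""
    context = "\n".join(f"[{p['source']}] {p['text']}" for p in passages)
    return (
        "Answer using only the sources below and cite them by id.\n"
        f"Sources:\n{context}\n"
        f"Question: {question}"
    )


question = "Where are exchange rates published?"
passages = retrieve(question, DOCUMENTS)
prompt = build_grounded_prompt(question, passages)
print(prompt)
```

The resulting prompt would then be sent to the language model, which can draw on the retrieved passage and cite its id instead of relying on training patterns alone.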

An example illustrates the difference:

- Without grounding

  Question: “What is the current inflation rate in Germany?”

  LLM answer: “The inflation rate is around 2.5 percent.”

  → This figure sounds plausible but could be completely wrong, because the model derives it solely from language patterns.

- With grounding

  The model accesses data from the Federal Statistical Office and provides the correct, up-to-date inflation rate, including a source citation.
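The grounded variant of this example might look roughly like the sketch below. The lookup table stands in for a real statistics API, which is not called here; the stored value is a dummy and not an actual inflation figure, and all names (`DataPoint`, `lookup`, `grounded_answer`) are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DataPoint:
    value: float
    period: str
    source: str


# Placeholder store standing in for an official statistics API.
# The value is a dummy, NOT a real inflation figure.
STUB_STATS = {
    ("inflation_rate", "DE"): DataPoint(
        value=0.0,
        period="PLACEHOLDER",
        source="Federal Statistical Office (stub)",
    ),
}


def lookup(metric: str, country: str) -> Optional[DataPoint]:
    """Return the stored data point, or None if nothing is available."""
    return STUB_STATS.get((metric, country))


def grounded_answer(metric: str, country: str) -> str:
    """Answer only from looked-up data and always name the source."""
    point = lookup(metric, country)
    if point is None:
        # Refuse rather than guess when no verified data exists.
        return "No verified data available for this question."
    return f"The value is {point.value} percent ({point.period}). Source: {point.source}"


print(grounded_answer("inflation_rate", "DE"))
print(grounded_answer("inflation_rate", "FR"))
```

The design choice that matters is the refusal branch: when no data is found, the system says so instead of falling back to a plausible-sounding guess.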

For the SEO context, this means that texts created with grounding can be supported by real data and facts. This significantly increases their quality and reliability.

Why Grounding Matters for SEO

- Avoiding errors in content: Anyone creating AI-assisted content risks unintentionally publishing incorrect facts. This can seriously damage a website’s credibility. Grounding reduces this risk.

- Strengthening authority and trust: Google's quality rater guidelines assess content according to E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness). Source citations and verifiable information support these signals. Grounding helps ensure that content not only reads well but is also based on facts.

- User experience and search intent: Searchers expect precise and reliable answers. Grounded content meets this expectation better than generic AI-generated text. This increases user satisfaction and can have a positive impact on rankings.

Challenges and Limitations

- Data quality: Grounding is only as reliable as the sources it relies on. Incorrect or outdated data leads to inaccurate content.

- Complexity: Implementing grounding systems is technically demanding and requires interfaces with data sources.

- No guarantee: Even with grounding, models can still make mistakes — but the likelihood is significantly reduced.
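The data quality point can be partly addressed with simple checks before a source is used for grounding. Below is a minimal sketch of a freshness filter; the 90-day threshold, the source ids, and the dates are arbitrary assumptions, and a real pipeline would also need to verify correctness, not just age.

```python
from datetime import date, timedelta


def is_fresh(published: date, today: date, max_age_days: int = 90) -> bool:
    """True if the source is at most max_age_days old on the given day."""
    return timedelta(0) <= today - published <= timedelta(days=max_age_days)


# Hypothetical sources with their publication dates.
sources = [
    {"id": "report-old", "published": date(2020, 1, 15)},
    {"id": "report-new", "published": date(2024, 5, 1)},
]

today = date(2024, 6, 1)  # fixed so the example is reproducible
usable = [s["id"] for s in sources if is_fresh(s["published"], today)]
print(usable)
```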

Outlook: Grounding is essential for high-quality AI

The importance of grounding will continue to grow in the coming years. As AI becomes increasingly integrated into content processes, the pressure to provide reliable and verifiable information rises. For SEO, this means that data quality and transparent source citations will become even more critical success factors.

Anyone using AI for content creation should not view grounding as optional, but as a necessary foundation for high-quality SEO content.
