In most cases, it is unlikely that the rankings have been lost permanently. However, this very much depends on how long ago the website was removed from the index.

If a page cannot be indexed, Google cannot show it in the search results. The immediate consequence is lost rankings.

If the de-indexing did not take place long ago, it is very likely that Google will reinstate the old rankings of your URLs after you have the pages indexed again.

In this case, you should always work through the following three questions, one after the other:

- At what point did the rankings drop, or the page disappear from the index?

- Why are the pages no longer in the index?

- What do I do after eliminating the errors?

When did the ranking collapse?

If you noticed only yesterday that a page is no longer in Google’s index, the first step is to check when the page was removed from the index.

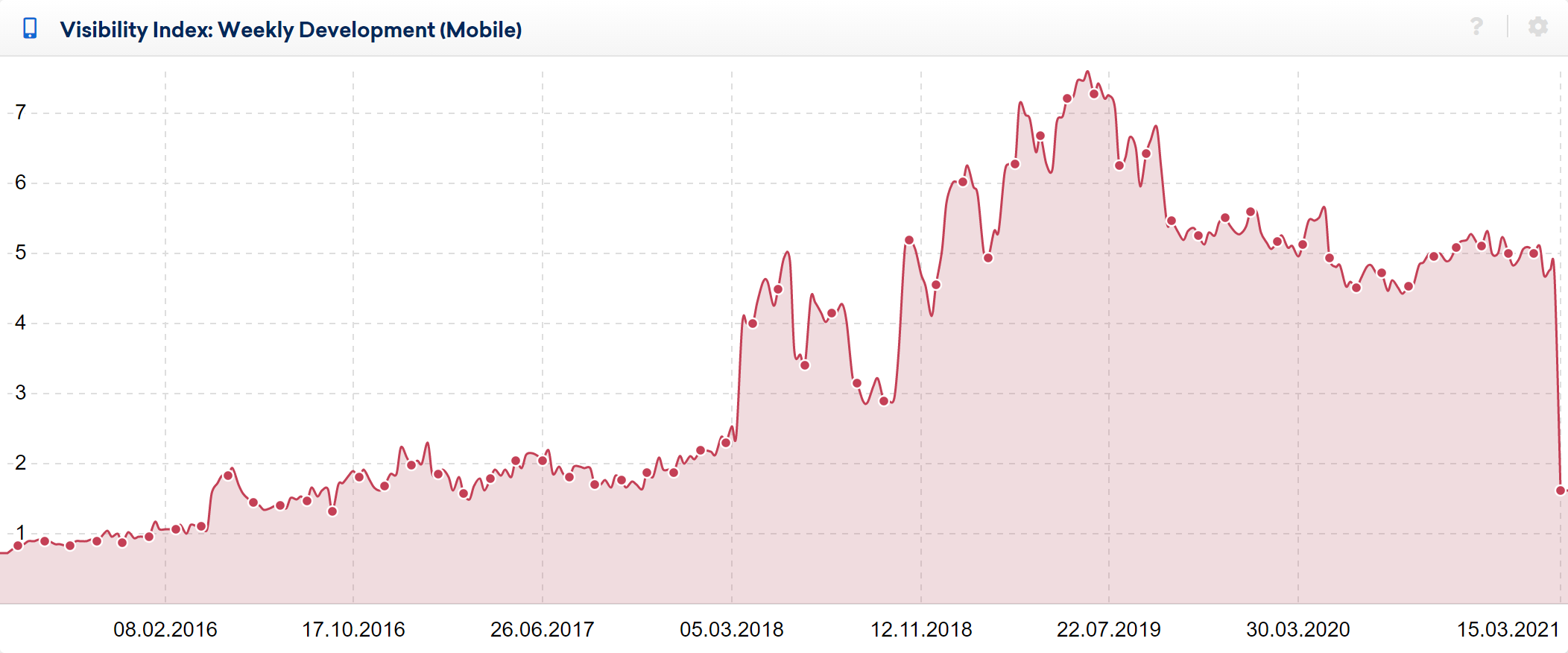

To find out when the page dropped out of the index, you can consult the Indexing Status report in Google Search Console or check the Visibility Index history of the domain in the SISTRIX Toolbox.

Since the Visibility Index reflects how visible a domain is in Google search results, it will show corresponding losses if the domain's pages no longer appear in those results.

In this example, a massive drop in visibility can be seen:

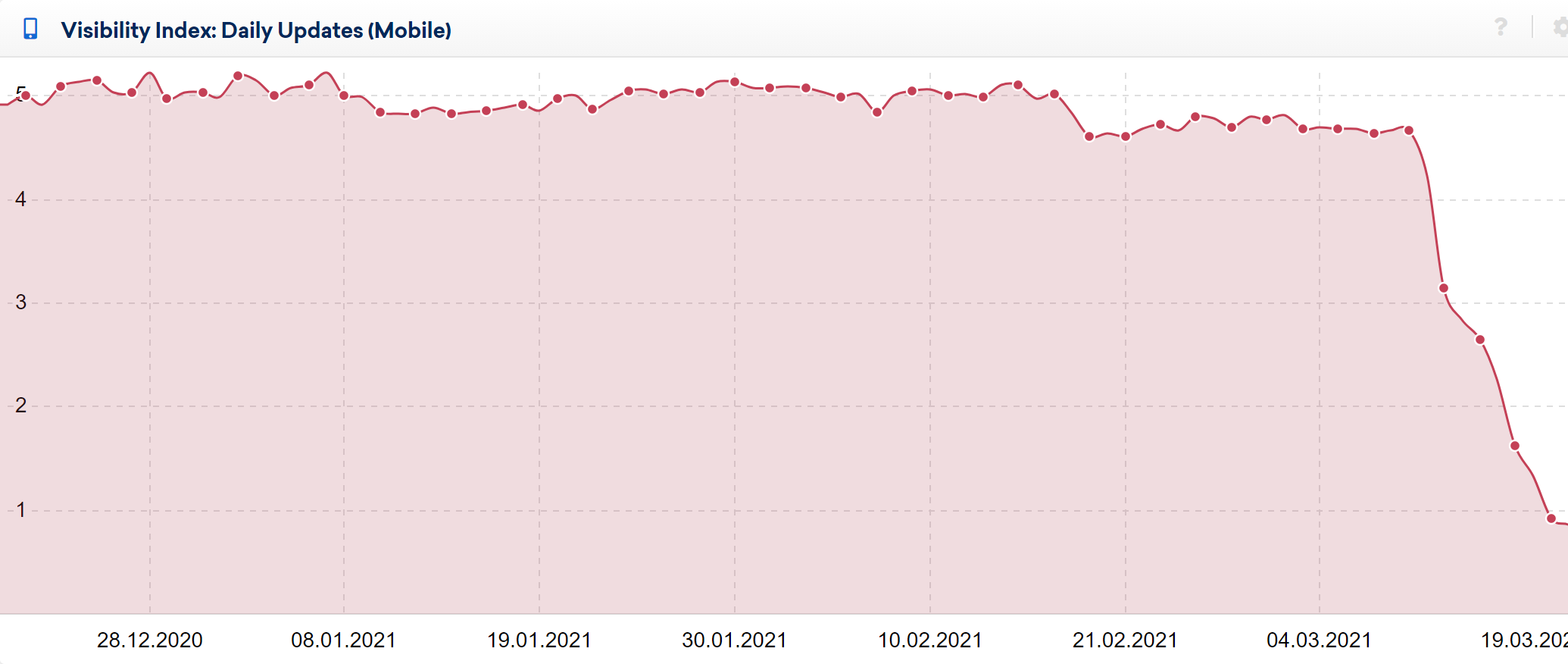

If the crash occurred less than 30 days ago, you can see even more precise information about the date in question in our daily Visibility Index history.

We can accurately say here that visibility plummeted between 9 March 2021 and 19 March 2021 and, armed with this knowledge, start to undertake the detective work.
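If you have exported a daily series of visibility values, the day of the collapse can also be narrowed down programmatically. The following is only a minimal sketch: the function name `largest_drop` and the sample values are made up for illustration and do not reflect any SISTRIX export format or real domain.

```python
from datetime import date


def largest_drop(series):
    """Return (previous_day, day, drop) for the biggest day-over-day fall.

    `series` is a list of (date, value) tuples sorted by date.
    Returns None if the series has fewer than two entries.
    """
    best = None
    for (d0, v0), (d1, v1) in zip(series, series[1:]):
        drop = v0 - v1
        if best is None or drop > best[2]:
            best = (d0, d1, drop)
    return best


# Hypothetical daily values, invented purely for this example.
SAMPLE_SERIES = [
    (date(2024, 1, 1), 2.0),
    (date(2024, 1, 2), 2.0),
    (date(2024, 1, 3), 0.5),
    (date(2024, 1, 4), 0.25),
]

if __name__ == "__main__":
    previous_day, day, drop = largest_drop(SAMPLE_SERIES)
    print(f"Largest drop between {previous_day} and {day}: {drop}")
```

The date range it returns is a starting point for checking deployments, CMS changes or server incidents from that period.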

Why are the pages no longer in the index?

At this point, we’re assuming that the website is actually available and running. Unpaid bills and server fires can cause very low-level problems!

The robots.txt

The first port of call should always be the robots.txt file on your site. This is where Googlebot can be stopped from crawling pages, and if Google cannot crawl a page, it generally cannot add the page's content to the index. There are certain exceptions, however: a blocked URL can still end up in the index without its content if other pages link to it.
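A quick way to test a robots.txt file against specific URLs is Python's standard `urllib.robotparser` module. The sketch below is an approximation: the helper name `is_crawl_allowed` and the example rules are assumptions for illustration, and the standard-library parser does not replicate every detail of how Google itself matches rules (wildcard patterns, for example), so treat the result as a first indication only.

```python
from urllib.robotparser import RobotFileParser


def is_crawl_allowed(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Check whether `user_agent` may fetch `url` according to the robots.txt text."""
    parser = RobotFileParser()
    # parse() expects an iterable of lines, so no network request is needed here.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical robots.txt content, used only for this example.
EXAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

if __name__ == "__main__":
    for url in ("https://example.com/blog/", "https://example.com/private/page.html"):
        allowed = is_crawl_allowed(EXAMPLE_ROBOTS_TXT, url)
        print(url, "allowed" if allowed else "blocked")
```

To check a live site, you would first download its robots.txt file and pass the text to the same function.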

The NoIndex statement

It is then worth visiting your own website to check whether the page was set to noindex by accident. This can sometimes happen with just a single checkbox in the content management system. In rare cases, the noindex statement is not found in the source code of the page, but in the server's HTTP response, in the X-Robots-Tag header.
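Both places where a noindex can hide, the robots meta tag and the X-Robots-Tag response header, can be checked with a few lines of standard-library Python. This is a simplified sketch: the function name `noindex_sources` is hypothetical, the HTML and headers are passed in as plain values that you would have to fetch yourself, and only the literal `noindex` directive is detected.

```python
from html.parser import HTMLParser


class _RobotsMetaParser(HTMLParser):
    """Collects the directives of <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            content = attr.get("content", "")
            self.directives.extend(d.strip().lower() for d in content.split(","))


def noindex_sources(html: str, headers: dict[str, str]) -> list[str]:
    """Return the places ("meta tag", "X-Robots-Tag header") that contain noindex."""
    sources = []
    parser = _RobotsMetaParser()
    parser.feed(html)
    if "noindex" in parser.directives:
        sources.append("meta tag")
    for name, value in headers.items():
        # Header names are case-insensitive; a substring check keeps the sketch short.
        if name.lower() == "x-robots-tag" and "noindex" in value.lower():
            sources.append("X-Robots-Tag header")
            break
    return sources


if __name__ == "__main__":
    page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
    print(noindex_sources(page, {"Content-Type": "text/html"}))
```

An empty list means neither source contains a noindex for the page you passed in.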

Technical problems

Once these two sources of error have been checked, it is worth checking whether there have been any technical issues. Are there, for example, server errors, or do automated requests lead to the requesting IP being blocked after a short time? To answer such questions, it is worth asking the technical department directly, or having the site crawled with the SISTRIX Optimizer. This automatically crawls your website, and you can see in the ‘live log’ during the crawl whether the server is delivering a lot of 4xx status codes (client errors, such as 403 for access denied) or 5xx status codes (server problems).
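If you have your own crawl results or server log entries to hand, grouping the status codes by class makes a spike in 4xx (client error) or 5xx (server error) responses easy to spot. The sketch below assumes a simple list of `(url, status_code)` pairs; that input format and the function name `summarize_status_codes` are assumptions for illustration and are not the export format of the SISTRIX Optimizer.

```python
from collections import Counter


def summarize_status_codes(results):
    """Count responses per status class, e.g. {"2xx": 10, "4xx": 2, "5xx": 1}.

    `results` is an iterable of (url, status_code) pairs.
    """
    counts = Counter(f"{status // 100}xx" for _url, status in results)
    return dict(counts)


# Hypothetical crawl results, invented purely for this example.
SAMPLE_RESULTS = [
    ("https://example.com/", 200),
    ("https://example.com/blog/", 403),
    ("https://example.com/shop/", 500),
    ("https://example.com/contact/", 503),
]

if __name__ == "__main__":
    for status_class, count in sorted(summarize_status_codes(SAMPLE_RESULTS).items()):
        print(status_class, count)
```

A high share of 4xx or 5xx responses is the signal to take to the technical department.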

Conclusion

If you have checked these three sources of error, you will in most cases find out why the page is no longer in Google’s index.

Now it’s time to put in some hard work and fix the errors!

What do I do after fixing the errors?

Once the reasons that are preventing Google from crawling/indexing the page have been addressed, the next step is to have Google crawl and index the page again as soon as possible.

You can use the sitemap feature in Google Search Console to ask Google to add the pages to the index. There are other methods of notifying Google about a sitemap, but it’s important to know that resubmitting a sitemap you have already submitted may not trigger Google to recrawl the pages. More information is available from Google.
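If you need a sitemap that lists only the affected pages, a minimal file in the sitemaps.org XML format can be generated with Python's standard library. This is a sketch under assumptions: the function name `build_sitemap`, the example URLs and the dates are hypothetical, and including `lastmod` is a choice made here, not a guarantee that Google will recrawl the pages.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Build a sitemap XML string from (url, last_modified) pairs.

    `last_modified` is a date string in YYYY-MM-DD format.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="unicode")


if __name__ == "__main__":
    # Hypothetical pages that have just been made indexable again.
    print(build_sitemap([
        ("https://example.com/", "2024-01-05"),
        ("https://example.com/blog/", "2024-01-05"),
    ]))
```

The resulting string can be saved as an XML file on your server and submitted via the sitemap feature in Google Search Console.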

As soon as Google has crawled and indexed the pages again, the search engine must check to see if the pages have changed. However, if the pages are still identical to the version before deindexing occurred, Google can usually recognise this very quickly and will restore the URL to its old ranking.