The robots.txt file is one of the tools SEOs can use to steer crawlers through their projects. It is also one of the oldest: with it, you can ask crawlers to keep out of specific areas of a website. In day-to-day work, it is often useful to change the robots.txt in order to test new settings or track down problems. Unfortunately, there are many reasons why such changes cannot always be made.

To make such tests as easy as possible, and to give you finer control over how the Optimizer crawler moves through your project, the Optimizer now offers a virtual robots.txt file.

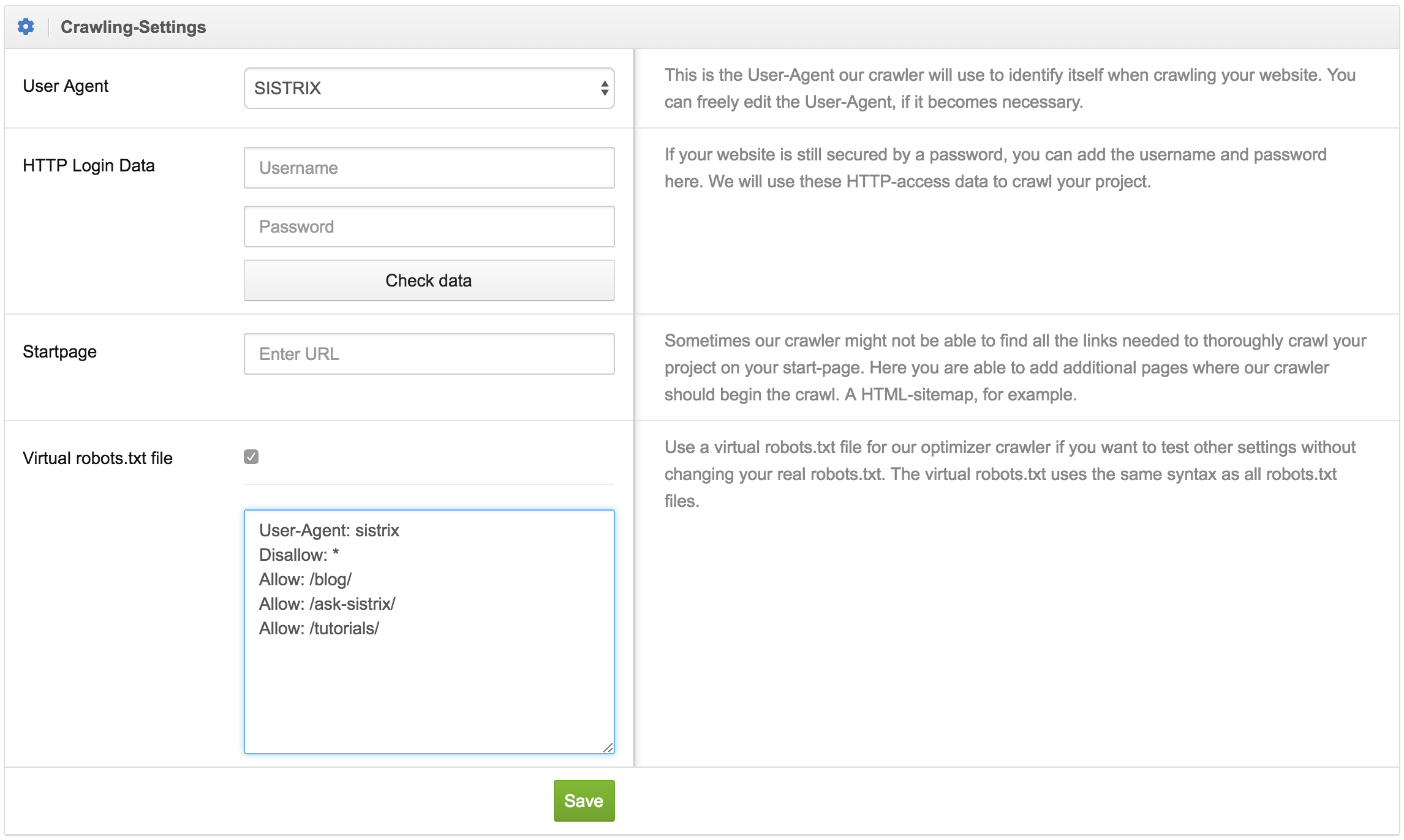

Go to “Project-Settings > Crawler” and select the option “Virtual robots.txt file” if you want to define your own robots.txt instead of using the project's real one.

Once you have ticked the checkbox, you can type in the content of the virtual robots.txt file. The virtual file uses the same syntax as a real one.

The next time your project is crawled, the system will use the content of the virtual file instead of looking for the real one. You can start a crawl manually right after setting up the virtual robots.txt file.

One use case for this feature is crawling only a few specific directories of your project.
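
As a rough illustration of that use case, a virtual robots.txt along the following lines could restrict a crawl to two directories. This is only a sketch: the directory names `/blog/` and `/shop/` are hypothetical placeholders, and it assumes the crawler honours `Allow` rules in the usual way, where the more specific matching rule takes precedence over the blanket `Disallow`.

```text
# Sketch of a virtual robots.txt (directory names are hypothetical)
# Applies to every crawler that reads this file
User-agent: *
# Directories that should still be crawled
Allow: /blog/
Allow: /shop/
# Everything else is kept out of the crawl
Disallow: /
```

Because the file is virtual, rules like these only affect how the Optimizer crawls the project; the real robots.txt on your server stays unchanged.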